+- Like Ra's Naughty Forum (https://www.likera.com/forum/mybb)

+-- Forum: Fetishes, obsessions, traits, features, peculiarities (https://www.likera.com/forum/mybb/Forum-Fetishes-obsessions-traits-features-peculiarities)

+--- Forum: Kinky Artificial Intelligence (https://www.likera.com/forum/mybb/Forum-Kinky-Artificial-Intelligence)

+--- Thread: General Artificial Intelligence thread (/Thread-General-Artificial-Intelligence-thread)

RE: General Artificial Intelligence thread - Gitefétichistes - 24 Sep 2024

After 40 years of real-life games and xxx encounters, I have a lot of trouble with AI.

- For me, an author writes his own text.

- For me, a photo is taken in real life.

- For me, an artist draws it himself, even if it's on a computer.

That's why I have trouble with AI.

I don't judge; I watch and try to understand, so I don't die an idiot.

Perhaps this forum will help me understand better and get some ideas.

Maybe I'm too old for this.

RE: General Artificial Intelligence thread - brandynette - 24 Sep 2024

In the end, it's a tool. The issue I have with AI is the fact that they steal content to train their AIs.

If they paid the creators, it would be a different story.

I also have an issue with corporate AI when they go and censor my/our community's lifestyles. That's another subject, and the reason I'm developing bambisleep.chat.

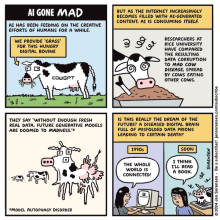

In the end, feeding AIs with AI-generated content will ultimately contaminate and destroy them, so there will have to be an agreement at some point in the future.

RE: General Artificial Intelligence thread - Like Ra - 24 Sep 2024

(24 Sep 2024, 17:22) brandynette Wrote: In the end its a tool.

Exactly. It's called progress. Otherwise, we wouldn't have the Internet, because we'd still be writing letters by hand in ink.

(24 Sep 2024, 17:22) brandynette Wrote: AI is the fact they steal the content to train their AIs.

They scrape the openly accessible files. No?

(24 Sep 2024, 17:22) brandynette Wrote: If they would pay the creators, it would be something else.

In most cases it's simply not possible, because those files are spread across the Internet.

(24 Sep 2024, 17:22) brandynette Wrote: I also have an issue with corporate AI when they go & censor my/our community's lifestyles.

Yes. But this is what the vanilla public and SGWs ask for. Along with "ban the AI for stealing" 😉

(24 Sep 2024, 17:22) brandynette Wrote: In the end feeding AIs with AI generated content will ultimately contaminate them

Absolutely.

RE: General Artificial Intelligence thread - brandynette - 25 Sep 2024

Scraping thumbnails is just low.

Exactly, 'cause our entire web is just ad space while our content is fast food.

UR INSANE!

Okay, let's go with it, 'cause hell yeah. Once bambisleep.chat gets trained on what she generated thanks to all the bambis, I'll squeeze her into the big ones and ruin it for everyone. *giggle*

RE: General Artificial Intelligence thread - Like Ra - 25 Sep 2024

(25 Sep 2024, 20:27) brandynette Wrote: Scraping thumbnails is just low.

Full images are also free.

RE: General Artificial Intelligence thread - rebroad - 28 Sep 2024

(29 Aug 2024, 23:38) rebroad Wrote: Dilbert’s Scott Adams Says He Hypnotized Himself With ChatGPT - Decrypt

Interesting post recently about how AI is a powerful hypnotist - shame they didn't publish their prompts!!

https://chatgpt.com/share/66f8068d-5238-8000-b88e-eb6c0f97acee

ChatGPT claims it's highly unlikely that it would have done what Scott Adams claims it did!

RE: General Artificial Intelligence thread - brandynette - 01 Oct 2024

(28 Sep 2024, 14:41) rebroad Wrote:
(29 Aug 2024, 23:38) rebroad Wrote: Dilbert’s Scott Adams Says He Hypnotized Himself With ChatGPT - Decrypt

https://chatgpt.com/share/66f8068d-5238-8000-b88e-eb6c0f97acee

Interesting post recently about how AI is a powerful hypnotist - shame they didn't publish their prompts!!

ChatGPT claims it's highly unlikely that it would have done what Scott Adams claims it did!

Any ChatGPT below version 3 will do what Scott Adams claims.

The instruct models are very proficient at it.

RE: General Artificial Intelligence thread - jadefortyfour - 02 Oct 2024

(01 Oct 2024, 12:52) brandynette Wrote:
(28 Sep 2024, 14:41) rebroad Wrote:
(29 Aug 2024, 23:38) rebroad Wrote: Dilbert’s Scott Adams Says He Hypnotized Himself With ChatGPT - Decrypt

Interesting post recently about how AI is a powerful hypnotist - shame they didn't publish their prompts!!

The prompt? We thought everyone already knew that!

Isn't it:

"42"

🤣

RE: General Artificial Intelligence thread - brandynette - 03 Oct 2024

42 bambis were sitting strapped to programming chairs.

One bambi just went pop. 41 more bambis to go!

41 bambis were sitting strapped to programming chairs.

One bambi just went pop. 40 more bambis to go!

40 bambis were sitting strapped to programming chairs.

One bambi just went pop. 39 more bambis to go!

39 bambis were sitting strapped to programming chairs.

One bambi just went pop. 38 more bambis to go!

38 bambis were sitting strapped to programming chairs.

One bambi just went pop. 37 more bambis to go!

37 bambis were sitting strapped to programming chairs.

One bambi just went pop. 36 more bambis to go!

Oops, left the counter on.

RE: General Artificial Intelligence thread - jadefortyfour - 03 Oct 2024

(01 Oct 2024, 12:52) brandynette Wrote: ChatGPT claims it's highly unlikely it would have done what Scott Adam's is claiming it did!

We extended the conversation with the chatbot by asking what qualifies an inorganic, non-human software program to opine on whether a human being is experiencing a trance state.

It admits it is not qualified to make such an assessment.

We then asked whether, in the process of 'learning', any validation was done on whether the information it was digesting was true, and if not, how it could be sure that falsehoods were not embedded in its responses.

The chatbot could offer no assurance of accuracy.

We then asked whether the chatbot was involved in producing advertising copy (persuasive language), which often makes specious claims about products or services.

The chatbot says it doesn't create anything; instead it spits back what it digested, in a conversational format that mimics a conscious exchange, and it relies on the veracity of the information's suppliers for any test of accuracy or honesty.

We then asked if the chatbot could arrange untrue statements into a persuasive response of the form used in product advertising.

Yes. It relies on the integrity of the data supplied, and then it restates its (apparently useless) ethical constraints, since those constraints depend on the good will and honesty of the people who created the data it consumed.

The chatbot agreed this was a true statement.

So we came to the conclusion that creating false narratives is well within the scope of this software, since it is wholly dependent on the integrity of the data sources it consumes. Certain words or phrases, such as "hypnosis", may trigger the ethical statement, but churning out deceptive copy is really "just a function" of "what it does."

So while we might also doubt Scott Adams' claims, we seriously doubt ChatGPT's claims of ethical behavior, "honesty", or accuracy in objectively assessing subjective human states of consciousness.

So we went with Douglas Adams to get the first prompt aligned 😄